An Overview of Data Warehousing

The data warehousing is a fundamental aspect of modern business intelligence, focused on transforming raw operational data into structured information that supports effective decision-making. At its core, data warehousing is the process of taking data from various legacy and transaction database systems and transforming it into organised information in a user-friendly format to enable and encourage data analysis and support fact-based business decision making. A key principle is to align the data warehouse with the business needs, which are the information requirements of the end users.

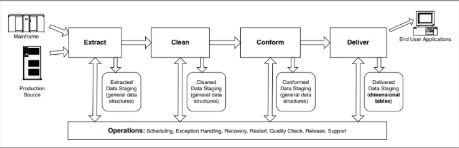

The architecture of a data warehouse typically comprises two main components: the back room and the front room. This article primarily focuses on the back room, which is where data is prepared. The front room, or presentation area, is where end users access the transformed data for querying and analysis.

The process of transforming data from its original format to a dimensional data store, often referred to as the Extract-Transform-Load (ETL) system, accounts for a significant portion (around 70%) of the time, effort, and expense of most data warehouse projects. This article expands the traditional ETL steps into extract, clean, conform, and deliver.

The key steps towards a data warehouse, particularly through the ETL process:

Extracting

This initial step involves reading and understanding the source data from various operational systems. These source systems can be heterogeneous, including mainframes, relational databases, XML sources, flat files, Web logs, and ERP systems. Before physical ETL development begins, a logical data map should be created. This map document outlines the relationship between the original source fields and the final destination fields in the data warehouse, acting as a functional specification for ETL developers. The goal of extraction is to capture the raw data, usually writing it to disk with minimal restructuring to ensure speed and allow for restarts if necessary.

Cleaning

Once the data is extracted, the next stage is ensuring data quality and consistency. This involves defining data quality and addressing issues to make the data accurate, meaning that the values and descriptions truthfully and faithfully describe their associated objects. Data profiling is a crucial step to this step, employing analytic methods (EDA) to understand the content, structure, and quality of the data in the source systems. Data anomalies are detected and addressed during this phase.

Conforming

This step is required whenever two or more data sources are merged in the data warehouse. Separate data sources cannot be queried together unless their textual labels are identical and similar numeric measures have been mathematically rationalised. Conformed dimensions and conformed facts are central to this process, ensuring a consistent representation of data across the enterprise and allowing for integration. This requires an enterprise-wide agreement to use standardised domains and measures.

Delivering

The final back-room step is physically structuring the data into a set of simple, symmetric schemas known as dimensional models, or star schemas. These schemas consist of fact tables, which contain numerical measurements, and dimension tables, which provide the descriptive context surrounding those measurements. This structure significantly reduces query times and simplifies application development. The presentation area of the data warehouse houses these dimensionally modelled tables, which are then directly accessed by query tools, report writers, dashboards, and OLAP cubes.

Example:

Consider a retail grocery chain that wants to analyse its sales data.

- Extract

The ETL process extracts transaction data from the Point-Of-Sale (POS) systems in each store. This data might include details like transaction ID, product code, quantity sold, price, and date/time.

- Clean

The extracted product codes might have inconsistencies (e.g., slightly different descriptions for the same product across different stores). The cleaning process standardises these product descriptions and corrects any data entry errors identified through data profiling.

- Conform

The data warehouse might also pull customer information from a separate loyalty program database. To analyse sales by customer demographics, the customer identifiers from the POS system are conformed with the identifiers in the loyalty database using record matching logic. This ensures that a single view of a customer can be used across both datasets.

- Deliver

Finally, the cleaned and conformed sales data is loaded into a sales fact table, which contains the transaction metrics (e.g., quantity sold, sales amount). This fact table is linked to dimension tables such as a product dimension table (containing standardised product information) and a customer dimension table (containing conformed customer information). This dimensional model allows business users to easily query and analyse sales performance by product, customer segment, store, and time.

The data warehousing provides a means for transforming operational data into analytical information. By following key steps like extracting, cleaning, conforming, and delivering data into dimensional models, organisations can build a reliable foundation for decision making.